Designing Brain-Based Robots

- Published 27 Mar 2013

- Reviewed 27 Mar 2013

- Author Susan Gaidos

- Source BrainFacts/SfN

Computers have changed the way we live, work, and think. Today’s “smart” machines can understand speech, play chess, and even beat some of the best champions at “Jeopardy!” But for all that computers can do, none of them can fully outperform their creators. Yet.

Scientists are constructing models that simulate what goes on inside the human brain to help better understand how the brain works and discover new ways to create computers that act more like humans.

Although the brain is sometimes compared to a computer, scientists working to build such models are quick to point out the differences between the two.

Hardware, software differences

For starters, the brain isn’t wired like a computer, says theoretical neuroscientist Chris Eliasmith, of the University of Waterloo, in Ontario.

“One way to describe the difference between digital computers and the brain is in terms of the architecture,” he says. “That is, in how the components are arranged and what each of the components do.”

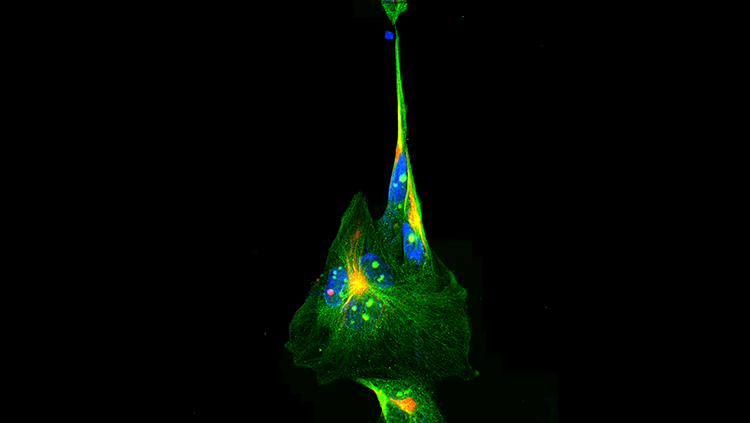

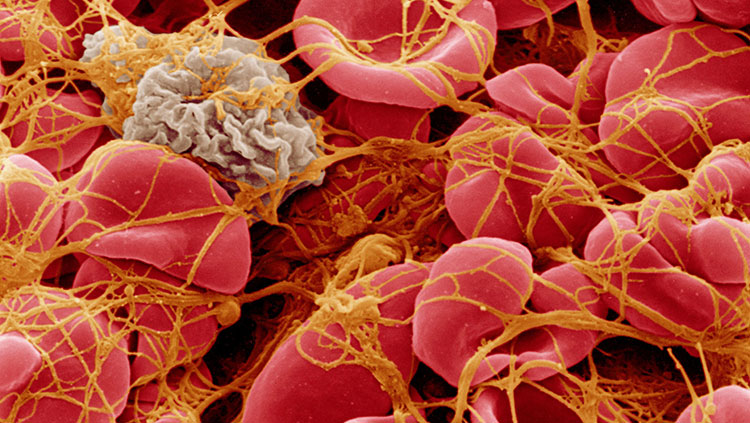

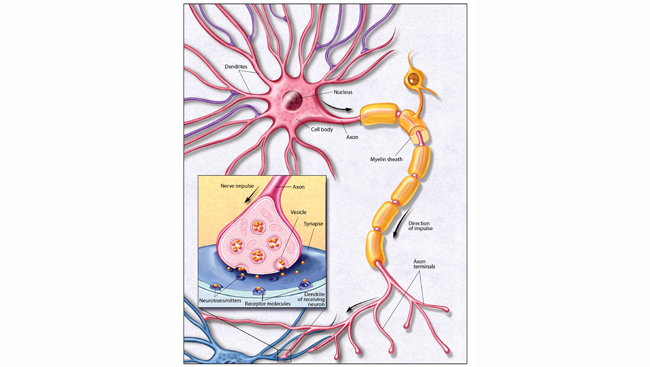

While the hardware of a computer is an elaborate collection of electrical on-off switches, communication between brain cells is more variable. Sometimes a cell will send a chemical message across a synapse (the junction between cells), and sometimes it won’t. Instead of treating all incoming signals alike, as a digital circuit does, neurons can give added weight to pulses coming from a favorite source. This flexibility makes the brain less precise in its calculations than a computer, but more energy efficient: it uses less energy for each computation.
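
To make the contrast concrete, here is a minimal Python sketch of a toy neuron under deliberately simplified assumptions: each incoming pulse crosses its synapse only with some probability, and the pulses that do arrive are scaled by a per-source weight before being compared against a firing threshold. The function names, weights, release probability, and threshold are illustrative assumptions, not measured values. A digital AND gate is included for comparison.

```python
import random


def neuron_fires(inputs, weights, release_prob, threshold, rng=random):
    """Return True if a toy neuron fires for one set of incoming pulses.

    inputs: 1 if a presynaptic cell sent a pulse, else 0
    weights: how much this neuron "trusts" each source (assumed values)
    release_prob: chance that a synapse passes on a given pulse (assumed)
    threshold: summed input needed for the neuron to fire (assumed)
    """
    total = 0.0
    for pulse, weight in zip(inputs, weights):
        # Sometimes the chemical message crosses the synapse, sometimes not.
        if pulse and rng.random() < release_prob:
            total += weight
    return total >= threshold


def and_gate(inputs):
    """Digital contrast: every input counts equally and the result never varies."""
    return all(inputs)


if __name__ == "__main__":
    rng = random.Random(0)
    weights = [1.0, 0.2, 0.2]  # illustrative: the first source is the "favorite"
    favorite_only = [1, 0, 0]
    trials = 10_000
    fired = sum(neuron_fires(favorite_only, weights, 0.5, 0.9, rng) for _ in range(trials))
    print(f"neuron fired on {fired / trials:.0%} of trials")  # roughly half
    print(and_gate(favorite_only))  # False: a gate cannot favor one input
```

Run repeatedly on the same input, the toy neuron does not always give the same answer, and a pulse from its favored source alone can be enough to make it fire, something the AND gate never does.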

Unlike a computer, your brain is capable of continuously changing, or updating, its hardware in response to new experiences. Over time, the connections between some brain cells are strengthened, and in some cases, cells can be added or removed. Computers don’t have this kind of flexibility. While updated software can instruct computers to perform in different ways, their hardware is unchanging.
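
The strengthening of connections can be illustrated with Hebb’s rule, a classic textbook simplification often summarized as “cells that fire together wire together.” The sketch below is an assumption for illustration, not a model used by the researchers quoted here: connections between co-active cells grow, every connection slowly decays, and any connection that fades below a cutoff is pruned. Adding or removing whole cells is not modeled, and the function name and rates are hypothetical.

```python
def hebbian_step(weights, activity, learning_rate=0.1, decay=0.02, prune_below=0.05):
    """Return updated connection strengths after one round of experience.

    weights: dict mapping (source, target) cell pairs to connection strength
    activity: dict mapping each cell to how active it was (0.0 to 1.0)
    learning_rate, decay, prune_below: hypothetical values chosen for illustration
    """
    updated = {}
    for (src, dst), w in weights.items():
        # Hebb's rule: connections between co-active cells get stronger...
        w += learning_rate * activity[src] * activity[dst]
        # ...while every connection slowly fades.
        w -= decay * w
        # Connections that fade below the cutoff are removed (pruned).
        if w >= prune_below:
            updated[(src, dst)] = w
    return updated


if __name__ == "__main__":
    weights = {("A", "B"): 0.5, ("A", "C"): 0.5}
    for _ in range(150):
        # Cells A and B are repeatedly active together; C stays silent.
        weights = hebbian_step(weights, {"A": 1.0, "B": 1.0, "C": 0.0})
    # The A-B link has grown; the unused A-C link has been pruned.
    print(weights)
```

The same “hardware” (the dictionary of connections) ends up rewired by experience alone, with no new program loaded.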

Brains and computers both store and retrieve information, but they go about it in different ways. Computers typically run a single software program that has access to all the memory inside the computer. In the brain, no single nerve cell has access to all information. Instead, individual cells communicate with hundreds of nearby cells to access information. Millions of nerve cells combine signals simultaneously, forming circuits to process information or plan a sequence of actions.

A more human model

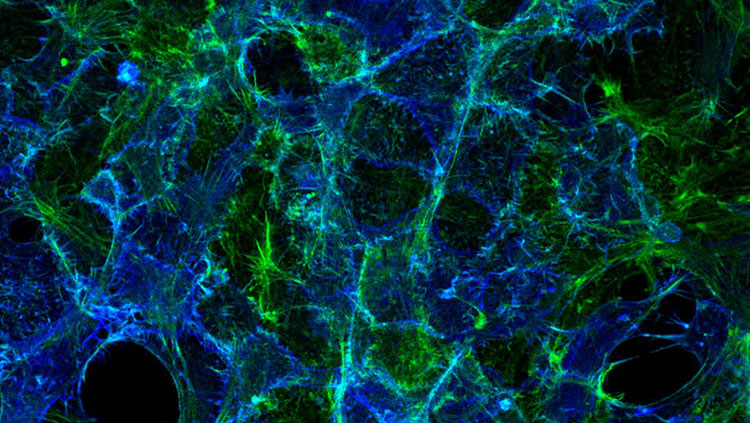

In recent years, the way in which systems of neurons in the brain interact as a network has inspired massively parallel processing architectures and models that process information in human-like ways.

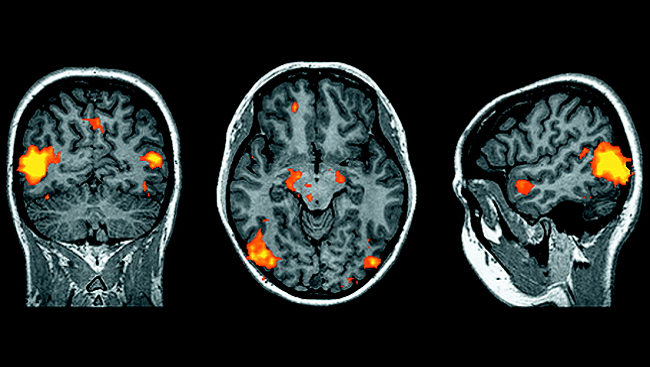

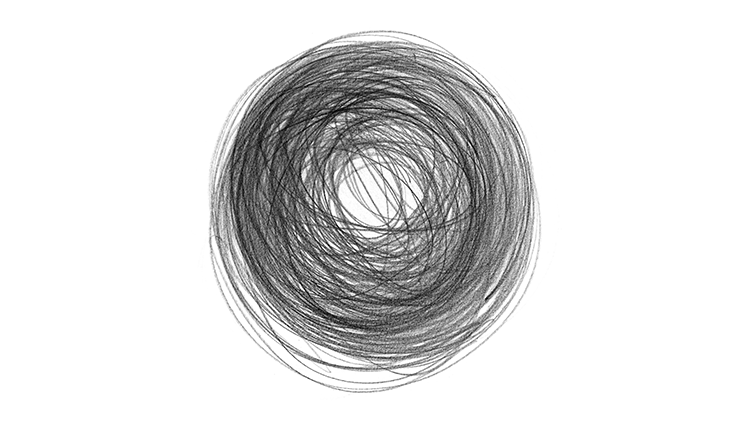

Eliasmith designed a large-scale computer model he named Spaun (short for Semantic Pointer Architecture Unified Network). Using about 2.5 million simulated “nerve cells” that mimic some of the brain’s physiological properties, a simulated eye that sees, and an arm that draws, Spaun is able to recognize, process, and jot down numbers and lists of items when commanded.
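
Models like Spaun are built from simulated spiking neurons. As an illustration of what a single simulated “nerve cell” might compute, the sketch below uses the leaky integrate-and-fire (LIF) neuron, a widely used simplified spiking model. It is not Spaun’s code; the function name and parameter values (time step, leak time constant, refractory period, threshold) are assumptions chosen for readability.

```python
def simulate_lif(input_current, dt=0.001, tau_rc=0.02, tau_ref=0.002, v_threshold=1.0):
    """Simulate one leaky integrate-and-fire neuron; return spike times in seconds.

    input_current: list of input values, one per time step (dimensionless, assumed)
    dt: simulation time step in seconds
    tau_rc: membrane time constant, i.e. how quickly the voltage leaks away
    tau_ref: refractory period after a spike, during which input is ignored
    v_threshold: voltage at which the neuron spikes
    """
    v = 0.0
    refractory_left = 0.0
    spikes = []
    for step, current in enumerate(input_current):
        if refractory_left > 0:
            # Just after a spike the cell cannot respond.
            refractory_left -= dt
            continue
        # The voltage is pushed up by the input and leaks back toward zero.
        v += dt * (current - v) / tau_rc
        if v >= v_threshold:
            spikes.append(step * dt)
            v = 0.0  # reset after the spike
            refractory_left = tau_ref
    return spikes


if __name__ == "__main__":
    one_second = 1000  # steps of 1 ms
    for level in (0.9, 1.5, 3.0):
        n = len(simulate_lif([level] * one_second))
        # Too-weak input never fires; stronger input fires more often.
        print(f"input {level}: {n} spikes per second")
```

The neuron turns the strength of its input into a firing rate, one of the ways information can be carried by simulated nerve cells in large-scale models.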

Models such as Spaun allow scientists to test theories about how the brain routes information, Eliasmith says.

“Because we have access to all of the data that the model generates, we can record activity patterns that occur as [the model] performs various functions. We can then look in a real brain to see if the patterns of activity are similar across the two.”

Learning from experience

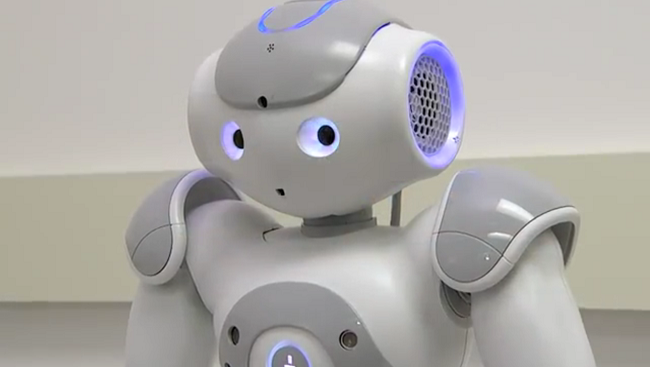

At the Technical University of Munich, researchers are designing mobile robots that can see, move, and lift objects. The scientists are developing models, run on massively parallel processors, that let the robots learn concepts from their own sensory experience rather than having those concepts pre-programmed.

“Humans do this naturally all the time,” says Jörg Conradt, a neuroscientist working on the project. “We fuse information from what we see and how we move with what our sensory systems tell us.”

Over the past two years, the team has seen signs that the robots can modify their behavior in response to changes in their environment. In one study, a robot learned to adjust its position when lifting a glass, depending on how much water the glass held. Another study found that robots with near-sighted camera eyes learned to compensate by moving closer to objects to observe them, just as people would.

“In the near future I expect we’ll see more adaptive, flexible robots, that can survive in a human world, not only in an environment that is prepared for robots,” Conradt says.

Studying how such robots work will also provide a better understanding of the fundamental computing principles at work in the brain, he adds.
