ICYMI: Different Areas of the Brain Process Lyrics and Melody

- Published: 4 Mar 2020

- Author: Alexis Wnuk

- Source: BrainFacts/SfN

These were the top neuroscience stories for the week of February 24, 2020.

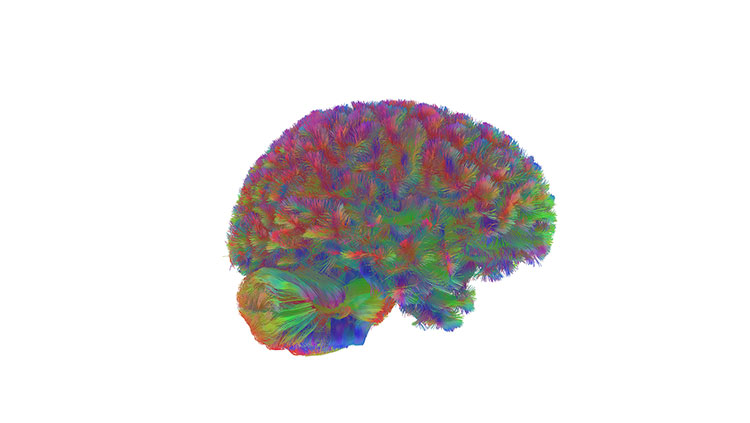

Lyrics and Melody Decoded Separately in Brain

Speech and music are two of humans' most distinctive, and most complex, cognitive abilities. A study published February 28 in Science offers a few clues about the neural hardware underlying these skills. It suggests that different parts of the brain specialize in detecting specific kinds of acoustic information, whether that information comes from speech or music. (A competing theory holds that music and speech are processed by separate, dedicated brain networks.) Researchers recorded short a cappella songs and then altered them, tweaking either the frequencies of the sounds or how the sounds changed over time. When the frequencies were altered, participants in the study could no longer discern the melody. When the timing of the sounds was altered, participants could still hear the melody but could no longer make out the words. To see what was happening in the brain, the researchers repeated the experiment with a smaller group of participants while scanning their brains with fMRI. They found that speech activated the auditory cortex in the left hemisphere, while melody perception took place in the corresponding area of the right hemisphere. Together, the results suggest the two hemispheres' auditory cortices are tuned to different acoustic cues: changes over time for the words of a song, and frequency for its melody.

Related: Learning About Language From Birdsong

Read more: How The Brain Teases Apart A Song's Words And Music (NPR)

AI-Enhanced Robots Help Autistic Children Learn Social Skills

Robots can help autistic children learn important social skills. Unfortunately, robots can't pick up on subtle cues that a child is losing interest. Artificial intelligence may be able to help, as demonstrated in a study published February 26 in Science Robotics. For a month, a team of researchers recorded autistic children in their homes as they interacted with an assistive robot named Kiwi. The kids played math games on a tablet while Kiwi provided verbal and expressive feedback on their performance. A video camera tracked the children's gaze, and microphones recorded their conversations. When fed these data, a machine-learning algorithm predicted whether a child was engaged with 90% accuracy. The findings could help scientists develop robots that can tell when a child is becoming distracted and offer additional feedback to regain their attention.

Related: Humanoid Robot "Russell" Engages Children With Autism

Read more: Robots that teach autistic kids social skills could help them develop (MIT Technology Review)

Opioid Epidemic May Have Claimed More Lives Than Initially Thought

The U.S. opioid epidemic may be deadlier than previously thought. In fact, it may have claimed an additional 100,000 lives between 1999 and 2016, a 28% increase over previous estimates, according to a February 27 paper in the journal Addiction. Reports of drug overdose deaths often don't list the specific drug the person took; in the new study, researchers found this was the case in more than 20% of overdose deaths. Using data from overdose deaths in which the drug was known, the researchers built a mathematical model to estimate how many of the unattributed deaths could be linked to opioids. The model estimated that opioids were responsible for an additional 99,160 deaths, bringing the total death toll to 453,300.

Related: The Human Cost of Substance Use Disorders

Read more: The Opioid Epidemic Might Be Much Worse Than We Thought (The Atlantic)
