Mapping the Air

- Published 7 Aug 2012

- Reviewed 7 Aug 2012

- Author Aalok Mehta

- Source BrainFacts/SfN

Hearing may seem simple, but it actually takes a large amount of effort by the brain. Researchers studying barn owls — some of nature's most expert listeners — are unraveling the complex calculations the brain makes to identify a sound’s location. These discoveries are helping scientists understand how the brain learns new things in general, why languages become more difficult to learn as we get older, and how to improve hearing aid devices.

Making Maps From Sound

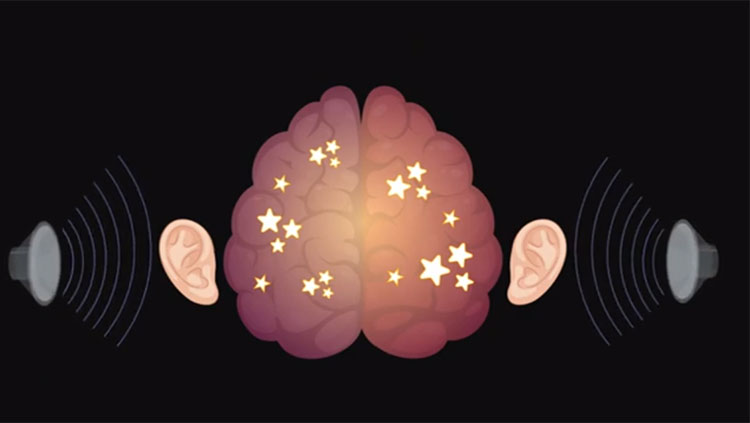

You hear an unexpected noise and instinctively turn to face its source. It seems like the easiest thing in the world. To the brain, however, it is anything but simple, requiring a complex set of calculations. By studying the razor-sharp hearing of barn owls, researchers have been able to trace the brain networks that oversee hearing and help us make sense of the sounds around us.

From Sight to Sound

Early research into how the brain processes information focused mostly on vision. In the 1960s, landmark studies of the visual systems of cats inspired scientists all over the world to study the senses. When it came to hearing, however, scientists made the best progress when they switched from studying cats to barn owls. Although mammals generally have good hearing, barn owls have especially acute hearing; for instance, they can hunt down mice in total darkness.

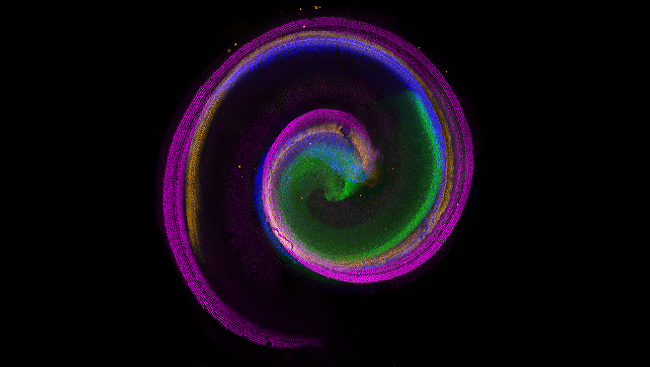

Applying the same techniques developed by vision researchers to barn owls, Masakazu (“Mark”) Konishi and Eric Knudsen helped figure out how the brain processes sound and uses it to create mental maps. By playing sounds in different locations while using electrodes to track brain activity, Konishi and Knudsen found an area in the midbrain where representations of sounds are precisely mapped to areas of space surrounding the owl. No matter what the sound was or how loud, brain cells in this area responded only to its location. These auditory maps mirrored similar maps found in the vision-processing areas of the brain.

This was a remarkable and surprising discovery, because ears don't respond to information spatially like the eyes do. The light-sensitive cells in the back of the eye are already laid out in the form of a map, and they naturally record location information. In contrast, ears only track the loudness and frequency of sounds. Konishi and Knudsen's work led to a flurry of new studies looking at how the brain is able to process basic types of sensory information into detailed and highly accurate maps of space.

A Finely Tuned System

In the decades since the discovery of auditory maps in the brain, researchers have deciphered many of the tools used to process sound location.

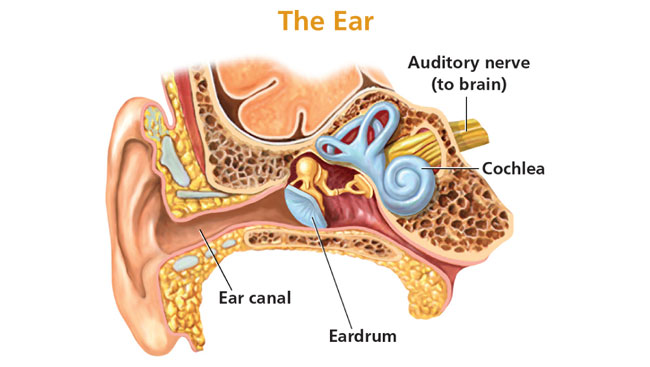

From studying the structure of the ear, scientists already knew that hearing is based on the frequency of sound waves, which we perceive as pitch. In essence, the ear registers all at once which sounds are present at each frequency. The key to identifying the location of sounds, scientists found, is comparing tiny differences between the sounds received by each ear. In particular, the brain simultaneously analyzes differences in timing and in loudness. For instance, a sound coming from the left reaches the right ear slightly later, and slightly softer, than it reaches the left ear because it must pass around the head.
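The comparison of timing and loudness between the two ears can be illustrated with a small Python sketch. This is only an engineering analogy under stated assumptions, not a description of how neurons carry out the calculation: the sample rate, the three-sample delay, the 0.8 loudness factor, and the function names `estimate_lag` and `level_difference_db` are all hypothetical choices made for illustration. The sketch slides one ear's signal against the other to find the best-matching shift, then compares overall loudness.

```python
import math
import random


def estimate_lag(left, right, max_lag):
    """Return the shift (in samples) at which `right` best lines up with `left`.

    A positive result means the sound arrived at the right ear later.
    """
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        # Correlation score: sum of products of samples paired at this shift.
        score = sum(
            left[i] * right[i + lag]
            for i in range(len(left))
            if 0 <= i + lag < len(right)
        )
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


def level_difference_db(left, right):
    """Return how much louder `left` is than `right`, in decibels."""
    def rms(signal):
        return math.sqrt(sum(v * v for v in signal) / len(signal))
    return 20 * math.log10(rms(left) / rms(right))


# Hypothetical test sound: a short noise burst coming from the listener's left.
SAMPLE_RATE = 48_000  # samples per second (assumed)
DELAY = 3             # extra travel time to the right ear, in samples (assumed)
rng = random.Random(0)
burst = [rng.uniform(-1.0, 1.0) for _ in range(500)]

left_ear = burst
# The right ear hears the same burst slightly later and slightly quieter.
right_ear = [0.0] * DELAY + [0.8 * v for v in burst[:-DELAY]]

lag = estimate_lag(left_ear, right_ear, max_lag=10)
print(f"timing difference: {lag / SAMPLE_RATE * 1e6:.1f} microseconds")
print(f"loudness difference: {level_difference_db(left_ear, right_ear):.2f} dB")
```

In this toy setup, a positive timing difference and a positive loudness difference both point to a source on the left. Swapping the two signals flips both signs, which would indicate a source on the right.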

After it travels through the ear, sound information is still largely organized by frequency — even information about differences in timing and loudness. To create an accurate map, the brain extracts auditory location information in an area of the midbrain called the inferior colliculus. This allows the brain to pinpoint a specific location for each sound source.

Connecting Maps for Hearing and Vision

Since this profound discovery, scientists have continued to explore how maps are made and used by the brain. One natural extension was to explore how the auditory and visual systems interact to calibrate and improve the maps each makes separately. Researchers found, for instance, that the brain combines auditory maps with maps from the visual system. In the resulting higher-order spatial maps, the source of the information — sound or sight — no longer matters, just the interpretation of where different objects are located. These in turn interact with systems controlling sophisticated behaviors such as attention and movement. For instance, the sound or sight of a dangerous predator might cause an animal to instinctively focus its eyes in that direction while simultaneously priming its wings for flight.

In this way, the ability to develop sophisticated spatial maps is of great importance to the survival of many species, including humans.

Better Solutions for Hearing Loss

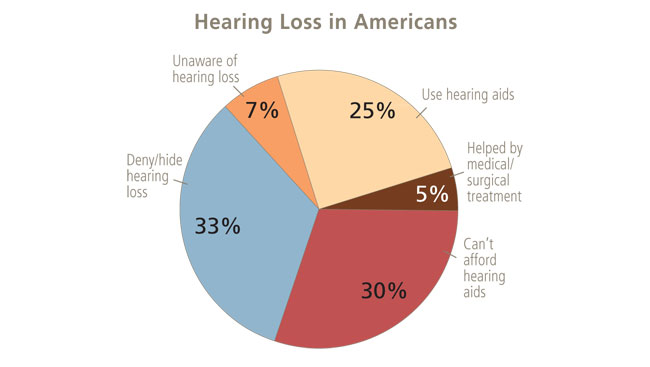

Understanding how the brain turns sound information into spatial information is leading to improved treatments for hearing loss, which affects 278 million people worldwide, according to a 2005 World Health Organization estimate.

Cochlear implants, for instance, are surgically implanted devices that send electric signals directly to auditory nerve fibers. Unlike hearing aids, cochlear implants can restore hearing in people with damage to sensitive inner-ear hair cells. Most people receive an implant in only one ear, however, leading to particular problems with determining the locations of sounds.

A better understanding of the algorithms the brain uses to process sound, based on barn owl research, has already led to an increased push for implants in both ears. However, problems with localization persist even in people with two implants, because the two devices tend not to be well synchronized. Researchers are actively translating basic science research into better methods for matching the information sent by cochlear implants to natural timing and loudness differences, resulting in better hearing.

From Hearing to Learning and Language

Because auditory brain pathways have been explored in such detail, researchers believe studying hearing in animals such as barn owls is an ideal way to study learning in general.

One line of research looks at how animals “calibrate” auditory maps. Young owls, for instance, can quickly adjust their auditory maps when information from the eyes and ears stops matching, such as when they are fitted with vision-distorting goggles. However, this plasticity (the ability to form new brain connections for processing sensory information) declines steeply with age, just as it does with vision, reflecting a critical developmental period for hearing.

Language learning has similar critical periods: windows of time during childhood when it is easy to learn to distinguish new sounds. By studying auditory plasticity, scientists hope to develop ways to extend or reopen critical periods for language learning. Together, these findings have important implications not only for science and health, but also for policy and education, including calls to teach foreign languages earlier in life.
