Neuroscience: Informing the Next Generation of Computers

- Published 1 Oct 2008

- Reviewed 2 Oct 2012

- Source BrainFacts/SfN

Technology, from advanced computing to high-resolution microscopes, is a key driving force for progress in neuroscience. For example, from very thin brain sections, researchers can reconstruct how actual neurons connect to each other, and computers can display the data in ways that increase scientific knowledge about brain function. How are neuroscientists returning the favor? Scientific findings about the brain are informing advances in technology, notably in efforts to enhance the power and performance of intelligent computers.

Computer entrepreneur Jeff Hawkins delivering the “Dialogues between Neuroscience and Society” lecture at Neuroscience 2007.

Joe Shymanski, copyright Society for Neuroscience

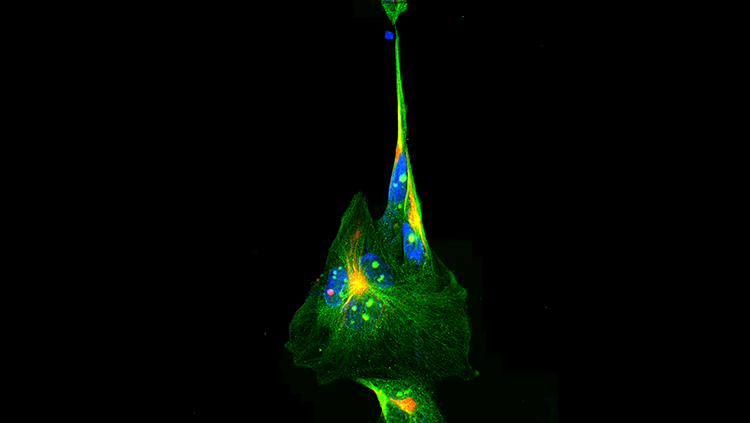

Three-dimensional computer-generated images show intricate patterns of connections between neurons that “wire” the brain. These wiring patterns shed new light on how the brain processes information.

Winfried Denk at the Max Planck Institute for Medical Research

Leading computer entrepreneurs are now working to integrate concepts of neural computation into the development of the next generation of computers. Neural computation studies how neurons in the brain transfer and process information. This broad field of study includes an effort to understand the hierarchical structure of the human neocortex, the region of the brain that is involved in complex processes like memory storage and decision-making. If a computer could mimic the sophisticated memory storage and retrieval system of the human brain while operating at a much faster rate, computer technology could become more efficient and more intuitive, and could potentially be trained to conduct analysis based on learning and prediction.

At Neuroscience 2007, Jeff Hawkins, the architect of the PalmPilot mobile technology tool, took up this idea when he delivered the third lecture in the “Dialogues between Neuroscience and Society” series, entitled “Why Can’t a Computer Be More Like a Brain?” Hawkins, long interested in neuroscience and also a founder of the Redwood Neuroscience Institute, discussed the past and future of computing, particularly how biologically inspired principles could drive a range of advances in the coming decade. In the last chapter of his book, On Intelligence, Hawkins discusses the possibility of building intelligent machines and what one might look like: “What makes it intelligent is that it can understand and interact with its world via a hierarchical memory model and can think about its world in a way analogous to how you think and I think about our world,” he writes. “Its thoughts and actions might be completely different from anything a human does; yet it still will be intelligent.”

Hawkins came to the topic through a longtime interest in neuroscience. After earning a degree in electrical engineering, he initially pursued an academic path studying neuroscience. But other interests beckoned and, in 1992, Hawkins co-founded Palm Computing, eventually bringing to market the PalmPilot and the Treo smartphone, which became leaders in mobile technology for their intuitive ease of use.

In March 2005, Hawkins and Palm co-founder Donna Dubinsky founded Numenta, Inc., along with Stanford graduate student Dileep George, to develop a new type of computer memory system based, in part, on brain function. Specifically, Numenta’s concept for Hierarchical Temporal Memory (HTM) technology is modeled after the structure and function of the neocortex, and Numenta believes HTM “offers the promise of building machines that approach or exceed human level performance for many cognitive tasks.”

Using a method called “machine learning,” HTM systems literally “learn” to recognize patterns through observation. One aim is to develop a vision system that can reliably recognize faces, or technology that can recognize dangerous traffic situations.

The development of the HTM technology by Hawkins and colleagues entails applying this memory model to develop computers that are “trained” to recognize objects despite differences in size, position, and viewing angle. Such computers would be capable of executing four “basic functions”: 1) discovering causes in the world; 2) inferring causes of novel input; 3) making predictions; and 4) directing behavior. Numenta believes this kind of innovation would bring computers closer to being able to process problems previously thought to be exceedingly difficult, if not impossible, for machines to solve.
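To make the ideas of learning by observation, making predictions, and reacting to novel input more concrete, here is a minimal sketch in Python. It is not Numenta's HTM algorithm and has no hierarchy; it is a toy first-order sequence memory, and the class name `TransitionMemory`, its methods, and the traffic-light data are all hypothetical, chosen only for illustration.

```python
from collections import Counter, defaultdict


class TransitionMemory:
    """Toy first-order sequence memory (an illustration, NOT Numenta's HTM)."""

    def __init__(self):
        # For each observed symbol, count which symbols followed it.
        self.following = defaultdict(Counter)

    def learn(self, sequence):
        """Observe a sequence and record every adjacent pair of symbols."""
        for current, nxt in zip(sequence, sequence[1:]):
            self.following[current][nxt] += 1

    def predict(self, current):
        """Return the most frequently seen successor, or None if unseen."""
        counts = self.following.get(current)
        if not counts:
            return None
        return counts.most_common(1)[0][0]

    def is_novel(self, current, nxt):
        """True if this transition was never observed during learning."""
        return self.following.get(current, Counter())[nxt] == 0


# Hypothetical training data: a traffic light cycling through its states.
memory = TransitionMemory()
memory.learn(["green", "yellow", "red", "green", "yellow", "red"])

print(memory.predict("yellow"))          # → red
print(memory.is_novel("green", "red"))   # → True (never observed)
```

The sketch only counts which observation tends to follow which. A system of the kind described above would additionally need to generalize across size, position, and viewing angle, which this example does not attempt.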

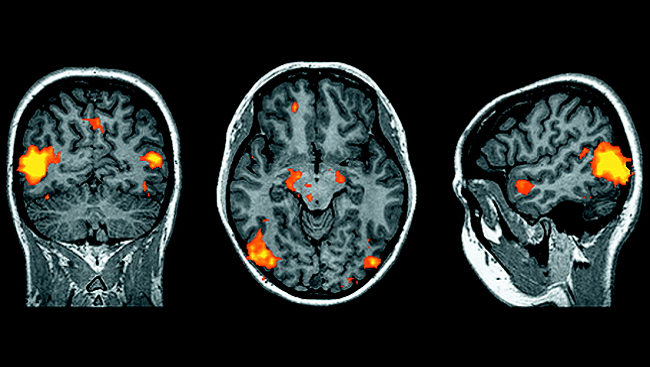

At the SfN lecture, Hawkins discussed efforts to develop hierarchical memory systems that capitalize on our understanding of maps of the mammalian neocortex. Research has revealed that the cerebral cortex in primates is organized as a “distributed hierarchical system” containing as many as ten distinct processing levels just within the visual system.

Scientists anticipate applications of this emerging computer technology in many areas, including monitoring data centers, building a “smart car,” tracking consumer behavior online, and supporting medical research and disease models for developing successful treatment approaches (for example, drug regimens).

A computer that can find abnormalities and process information based on pattern recognition and machine learning could yield significant findings and outcomes. For instance, Web and technology businesses, which store immense amounts of data using huge energy-consuming data centers, are seeking more efficient operations. Intelligent machines could reduce crashes or identify early warnings of equipment failure that would otherwise shut down those data centers, averting grave financial and business impact.
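As a rough illustration of what identifying early warnings could look like in the simplest case, the following Python sketch flags readings that deviate sharply from recent history. It uses a plain rolling statistical threshold rather than machine learning or HTM, and the function name `find_anomalies`, its parameters, and the temperature readings are assumptions made up for this example.

```python
from statistics import mean, stdev


def find_anomalies(readings, window=5, threshold=3.0):
    """Return indices of readings that deviate strongly from the preceding window.

    A reading is flagged when it lies more than `threshold` sample standard
    deviations away from the mean of the previous `window` readings.
    """
    anomalies = []
    for i in range(window, len(readings)):
        recent = readings[i - window:i]
        mu = mean(recent)
        sigma = stdev(recent)
        if sigma == 0:
            # Perfectly flat history: treat any change at all as a deviation.
            if readings[i] != mu:
                anomalies.append(i)
            continue
        if abs(readings[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


# Hypothetical server temperature readings with one sudden spike.
temperatures = [40, 41, 40, 42, 41, 40, 41, 75, 41, 40]
print(find_anomalies(temperatures))  # → [7]
```

A fixed threshold like this only catches abrupt spikes. The appeal of a learning system is that it could, in principle, pick up subtler patterns that precede a failure.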

Computer applications of neuroscience research are only in their infancy but offer one example of a rich and growing interplay between technology, business, and science that will continue in decades to come. And it will likely continue to turn full circle — as technology continues to advance, neuroscientists will be able to expand their understanding of the brain, creating additional opportunities for further testing and advancement of biologically inspired technology.
