Brain-Controlled Prosthetics

- Published 1 Apr 2009

- Reviewed 1 Apr 2009

- Author Susan Perry

- Source BrainFacts/SfN

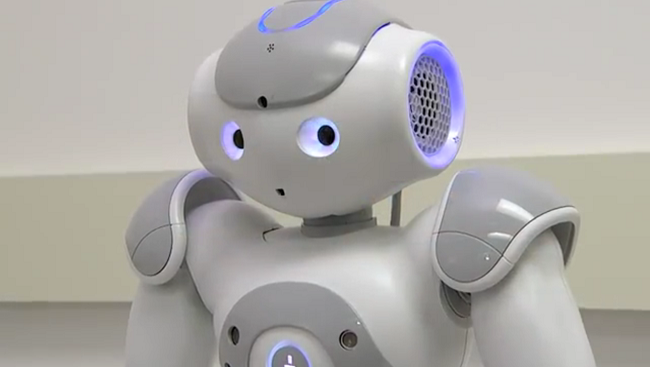

Mind over matter is no longer the stuff of science fiction alone. After years of basic research, neuroscientists have significantly advanced brain-computer interface (BCI) technology to the point where severely physically disabled people who cannot contract even one leg or arm muscle have independently composed and sent e-mails and operated a TV in their homes. They used only their thoughts to execute these actions.

A rhesus monkey with its arms gently restrained (and thus immobilized) feeds itself by operating a prosthetic arm with its mind. This research, supported by the National Institutes of Health, shows how knowledge generated by neuroscience research on animals has led to important advances in understanding and treating physical disabilities.

Thoughts can operate machines. With the aid of a tiny brain implant known as a brain-computer interface (BCI), scientists have developed technology that enables communication between brain activity and an external device. People almost completely paralyzed by an earlier spinal cord injury have been able to turn on a television, access e-mail on a computer, play simple video games, and even grasp a piece of candy and hand it to someone using a robotic hand—all by thinking about such movements and then having a computer translate those thoughts into action.

Recent studies involving monkeys have shown that the brain can even accept a mechanical arm as its own, manipulating it like a normal limb to perform a complex motor task, such as grabbing and eating food.

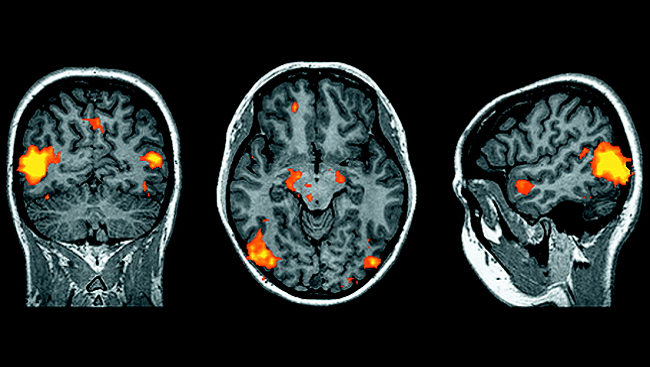

These and other remarkable advances in neural (brain-controlled) prosthetics are the result of decades of basic research into how the brain turns thought into physical action. Such findings demonstrate that movement areas of the brain continue to work years after the onset of paralysis. Although many technological hurdles remain, this research is leading to:

- New methods of restoring movement to people who are physically immobile due to injury or disease.

- Greater insight into how the brain organizes motor and other tasks.

As we maneuver through our daily environments, our brains are constantly taking what we see, hear, smell, taste, or touch and turning this information into split-second instructions that travel down our spinal cord and out to the muscles of our arms and legs, telling them how and when to move. We see a five-dollar bill on the ground? We stoop down and pick it up. We hear someone shouting, “Watch out!”? We quickly leap out of the way.

Such movements are not possible for the 250,000 Americans with spinal cord injuries, the 1.7 million Americans with limb loss, and others with neurological disorders such as brainstem stroke or amyotrophic lateral sclerosis (ALS) that can result in a devastating total-body paralysis known as “locked-in syndrome.” For people with these disabilities, the brain still fires up the neural signals to instruct an arm to pick up a five-dollar bill or a leg to step to the side, but those commands never make it to the appropriate muscles.

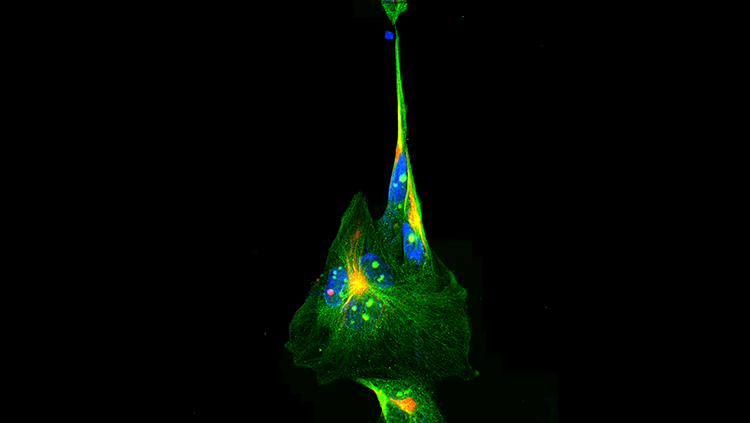

The aim of neural prosthetics is to send the brain’s “Get moving!” messages directly to either an artificial limb or a paralyzed limb whose muscles still work. To do this, scientists have developed sensors that detect the patterns of brain cell activity behind specific intended movements, along with mathematical algorithms, run on a computer, that decode those patterns. Deciphering these neural signals is extraordinarily tricky. Even the simplest movement (say, lifting an arm) involves a host of interrelated intentions (how fast? in what direction? at what angle?) and thus the firing of millions of neurons.
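
To make “decode” more concrete, here is a minimal sketch of one classic approach from monkey motor-cortex research, the population vector. It assumes each neuron is cosine-tuned, firing fastest when the intended movement points in that neuron’s “preferred direction”; each neuron then votes for its preferred direction in proportion to how far its firing rate rises above baseline. The tuning model, the firing-rate numbers, and the function names are illustrative assumptions, not the algorithms used in the studies described here.

```python
import math

def cosine_rate(preferred_angle, movement_angle, baseline=10.0, depth=8.0):
    """Hypothetical cosine tuning: firing rate (spikes/s) peaks when the
    movement direction matches the neuron's preferred direction."""
    return baseline + depth * math.cos(movement_angle - preferred_angle)

def population_vector(preferred_angles, rates, baseline=10.0):
    """Add up each neuron's preferred-direction unit vector, weighted by how far
    its rate rises above (or falls below) baseline; return the decoded angle."""
    x = sum((r - baseline) * math.cos(p) for p, r in zip(preferred_angles, rates))
    y = sum((r - baseline) * math.sin(p) for p, r in zip(preferred_angles, rates))
    return math.atan2(y, x)

# 8 hypothetical neurons with preferred directions evenly spaced around a circle
preferred = [2 * math.pi * k / 8 for k in range(8)]
intended = math.radians(60)  # the direction the user "thinks" about moving
rates = [cosine_rate(p, intended) for p in preferred]
decoded = population_vector(preferred, rates)
print(round(math.degrees(decoded), 1))  # prints 60.0
```

With evenly spaced preferred directions and noise-free rates, as here, the decoded direction matches the intended one exactly; with real, noisy recordings the estimate is only approximate, which is one reason practical systems fit their decoders to recorded data.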

Two basic types of sensors are used in neural prosthetics. One records the combined activity of many brain cells, either through the scalp (a technology called electroencephalography, or EEG) or from electrodes placed on the surface of the brain beneath the skull (electrocorticography, or ECOG). The other uses an array of dozens or more hair-thin microelectrodes, about the size of an aspirin, implanted directly into the brain’s motor cortex, the area associated with movement. Each microelectrode in the array detects the electrical impulses, called spikes, of single neurons as well as the more diffuse signals seen in the EEG or ECOG.
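
Spikes are often picked out of a microelectrode’s voltage trace by flagging the moments when the signal drops below a threshold set relative to the background noise. The sketch below assumes a threshold of −4.5 times the trace’s root-mean-square amplitude and a 1 ms refractory gap; both values, the function name, and the synthetic trace (bounded uniform noise plus three added spike shapes, so the result is easy to check) are illustrative assumptions, not parameters from the studies described here.

```python
import math
import random

def detect_spikes(trace, fs_hz, k=4.5, refractory_ms=1.0):
    """Return sample indices where the voltage first drops below -k * RMS.
    A refractory gap stops one spike from being counted several times."""
    rms = math.sqrt(sum(v * v for v in trace) / len(trace))
    threshold = -k * rms
    gap = int(fs_hz * refractory_ms / 1000)  # refractory gap in samples
    spikes, last = [], -gap - 1
    for i in range(1, len(trace)):
        # a downward crossing: previous sample at/above threshold, current below
        crossed = trace[i] < threshold <= trace[i - 1]
        if crossed and i - last > gap:
            spikes.append(i)
            last = i
    return spikes

# Synthetic 0.1 s trace sampled at 30 kHz (assumed units: microvolts):
# uniform noise plus three hypothetical spikes at known sample positions.
random.seed(0)
fs = 30_000
trace = [random.uniform(-15.0, 15.0) for _ in range(fs // 10)]
true_spikes = [500, 1500, 2500]
for t in true_spikes:
    for j, amp in enumerate([-40, -90, -60, -20]):  # brief negative deflection
        trace[t + j] += amp
print(detect_spikes(trace, fs))
```

Each detected index should fall within one sample of a position where a spike was added. Real recordings are usually band-pass filtered before thresholding; that step is omitted here.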

Both methods have their advantages and disadvantages. Because they pick up signals summed over a large area of the brain, the non-invasive EEG and the ECOG methods give a less precise measure of movement signals. The microelectrode array provides direct access to the signals of individual neurons involved in movement, but it must be inserted into brain tissue. Both types of sensors require daily recalibration, and their supporting equipment is bulky and non-portable. Efforts to build automated, portable, wireless versions are under way.
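
A minimal sketch of what a daily recalibration step might look like, assuming the simplest possible decoder: a single-channel linear map (velocity ≈ w · rate + b) refit by ordinary least squares from a short session in which the user attempts known movements. The data, the day-to-day shift in firing rates, and the function name `fit_linear_decoder` are hypothetical.

```python
def fit_linear_decoder(rates, velocities):
    """Ordinary least squares for velocity = w * rate + b (one input channel)."""
    n = len(rates)
    mean_r = sum(rates) / n
    mean_v = sum(velocities) / n
    cov = sum((r - mean_r) * (v - mean_v) for r, v in zip(rates, velocities))
    var = sum((r - mean_r) ** 2 for r in rates)
    w = cov / var
    b = mean_v - w * mean_r
    return w, b

# Hypothetical calibration sessions: the user attempts known cursor velocities
# (cm/s) while one channel's firing rate (spikes/s) is recorded. On day 2 the
# same intentions produce different rates, e.g. because the recording shifted.
target_velocities = [-10, -5, 0, 5, 10]
day1_rates = [20, 30, 40, 50, 60]  # rate = 2.0 * velocity + 40
day2_rates = [12, 21, 30, 39, 48]  # rate = 1.8 * velocity + 30

w1, b1 = fit_linear_decoder(day1_rates, target_velocities)
w2, b2 = fit_linear_decoder(day2_rates, target_velocities)

# Decode a day-2 rate of 39 spikes/s, which corresponds to an intended 5 cm/s
print(round(w1 * 39 + b1, 2))  # stale day-1 decoder: prints -0.5
print(round(w2 * 39 + b2, 2))  # recalibrated decoder: prints 5.0
```

The stale decoder reads an intended 5 cm/s movement as roughly standing still, while the refit decoder recovers it. Real systems fit many channels and several movement dimensions at once, often with more elaborate methods such as Kalman filters; this single-channel version only illustrates why yesterday’s fit can fail today.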

Scientists are also working hard to add sensory feedback to BCI technology. The goal is interactive devices that send information not only from the brain to a robotic arm but also from the arm back to the brain. Users would then know where the mechanical limb is in space and how it is moving (an essential form of sensory feedback known as proprioception) and could also feel touch. As a result, they could interact with their surroundings with the dexterity of an able-bodied person.

Scientists have already begun to record brain activity in the part of the cortex that processes proprioception, with the aim of creating computer algorithms that could simulate this sensation for an artificial limb. Studies in monkeys have also succeeded in recreating a sense of touch. Although many challenges remain and advances will be incremental, researchers are optimistic that technology enabling two-way communication between the brain and an external device will one day become a reality.

