Metacognition — I Know (or Don't Know) that I Know

- Published 27 Feb 2012

- Reviewed 27 Feb 2012

- Source: Wellcome Trust

At New York University, Sir Henry Wellcome Postdoctoral Fellow Dr Steve Fleming is exploring the neural basis of metacognition: how we think about thinking, and how we assess the accuracy of our decisions, judgements and other aspects of our mental performance.

Metacognition is an important-sounding word for a very everyday process. We 'metacognise' whenever we reflect on our own thinking and knowledge.

It's something we do on a moment-to-moment basis, according to Dr Steve Fleming at New York University. "We reflect on our thoughts, feelings, judgements and decisions, assessing their accuracy and validity all day long," he says.

This kind of introspection is crucial for making good decisions. Do I really want that bar of chocolate? Do I want to go out tonight? Will I enjoy myself? Am I aiming at the right target? Is my aim accurate? Will I hit it? How sure am I that I'm right? Is that really the correct answer?

If we don't ask ourselves these questions as a kind of faint, ongoing, almost intuitive commentary in the back of our minds, we're not going to progress very smoothly through life.

As it turns out, although we all do it, we're not all equally good at it. An example Steve likes to use is the game show 'Who Wants to be a Millionaire?' When asked the killer question, 'Is that your final answer?', contestants with good metacognitive skills will assess how confident they are in their knowledge.

If sure (I know that I know), they'll answer 'yes'. If unsure (I don't know for sure that I know), they'll phone a friend or ask the audience. Contestants who are less metacognitively gifted may have too much confidence in their knowledge and give the wrong answer - or have too little confidence and waste their lifelines.

Metacognition is also fundamental to our sense of self: to knowing who we are. Perhaps we only really know anyone when we understand how, as well as what, they think - and the same applies to knowing ourselves. How reliable are our thought processes? Are they an accurate reflection of reality? How accurate is our knowledge of a particular subject?

Last year, Steve won a prestigious Sir Henry Wellcome Postdoctoral Fellowship to explore the neural basis of metacognitive behaviour: what happens in the brain when we think about our thoughts and decisions or assess how well we know something?

Killer questions

One of the challenges for neuroscientists interested in metacognition has been the fact that - unlike in learning or decision making, where we can measure how much a person improves at a task or how accurate their decision is - there are no outward indicators of introspective thought, so metacognition is hard to quantify.

As part of his PhD at University College London, Steve joined a research team led by Wellcome Trust Senior Fellow Professor Geraint Rees and helped devise an experiment that could provide an objective measure of both a person's performance on a task and how accurately they judged their own performance.

Thirty-two volunteers were shown a series of pairs of very similar black-and-grey pictures on a screen and asked to say which one contained a brighter patch.

"We adjusted the brightness or contrast of the patches so that everyone was performing at a similar level," says Steve. "And we made it difficult to see which patch was brighter, so no one was entirely sure about whether their answer was correct; they were all in a similar zone of uncertainty."
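The article does not say exactly how the contrast was adjusted. One common way to hold everyone at a similar accuracy is an adaptive staircase: make the task harder after correct answers and easier after errors. The sketch below is illustrative only; the simulated observer, its threshold, the step size and the 2-down/1-up rule (which tends to settle near 71 per cent correct) are all assumptions, not details of the study.

```python
import math
import random

def simulated_observer(contrast, threshold=0.05, rng=random):
    """Hypothetical observer: 50% correct (guessing) at zero contrast,
    approaching 100% correct as contrast rises well above `threshold`."""
    p_correct = 0.5 + 0.5 * (1 - math.exp(-(contrast / threshold) ** 2))
    return rng.random() < p_correct

def two_down_one_up(n_trials=200, start=0.2, step=0.01, floor=0.001, seed=0):
    """Assumed 2-down/1-up staircase: two correct answers in a row lower the
    contrast (harder); any error raises it (easier)."""
    rng = random.Random(seed)
    contrast = start
    correct_streak = 0
    history = []  # list of (contrast shown, answered correctly?)
    for _ in range(n_trials):
        correct = simulated_observer(contrast, rng=rng)
        history.append((contrast, correct))
        if correct:
            correct_streak += 1
            if correct_streak == 2:
                contrast = max(floor, contrast - step)  # harder
                correct_streak = 0
        else:
            contrast += step  # easier
            correct_streak = 0
    return history

history = two_down_one_up()
late = history[100:]  # ignore the early descent from the easy starting contrast
accuracy = sum(ok for _, ok in late) / len(late)
print(f"accuracy over the last {len(late)} trials: {accuracy:.2f}")
```

Because the rule pushes difficulty up whenever someone is doing well, people with different eyesight end up at different contrasts but a similar hit rate, which is the "similar zone of uncertainty" Steve describes.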

They then asked the 'killer' metacognitive question: How sure are you of your answer, on a scale from one to six?

Comparing people's answers to their actual performance revealed that although all the volunteers performed equally well on the primary task of identifying the brighter patches, there was a lot of variation between individuals in terms of how accurately they assessed their own performance - or how well they knew their own minds.
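To make "comparing answers to performance" concrete, one widely used index of metacognitive accuracy is the area under the type 2 ROC curve: how well a person's confidence ratings separate their correct answers from their errors. This article does not spell out the study's exact analysis, so treat the sketch below as an illustration with made-up ratings on the one-to-six scale. It uses the pairwise form, which equals the trapezoidal area under the empirical type 2 ROC: 0.5 means confidence carries no information about accuracy, 1.0 means every correct answer was rated more confidently than every error.

```python
from itertools import product

def type2_auroc(confidence, correct):
    """Probability that a randomly chosen correct trial got a higher confidence
    rating than a randomly chosen error trial (ties count as half)."""
    hits = [c for c, ok in zip(confidence, correct) if ok]
    errors = [c for c, ok in zip(confidence, correct) if not ok]
    if not hits or not errors:
        raise ValueError("need both correct and incorrect trials")
    score = 0.0
    for h, e in product(hits, errors):
        if h > e:
            score += 1.0
        elif h == e:
            score += 0.5
    return score / (len(hits) * len(errors))

# Two hypothetical volunteers with identical task performance (6 of 10 correct)
correct = [True] * 6 + [False] * 4
conf_a = [6, 5, 6, 4, 5, 6, 2, 1, 3, 2]  # confident when right, unsure when wrong
conf_b = [3, 4, 2, 5, 3, 4, 4, 3, 5, 2]  # confidence unrelated to accuracy
print(type2_auroc(conf_a, correct))  # 1.0
print(type2_auroc(conf_b, correct))  # 0.5
```

Both volunteers score the same on the primary task, yet their metacognitive accuracy differs sharply, which is the kind of individual variation the study reported.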

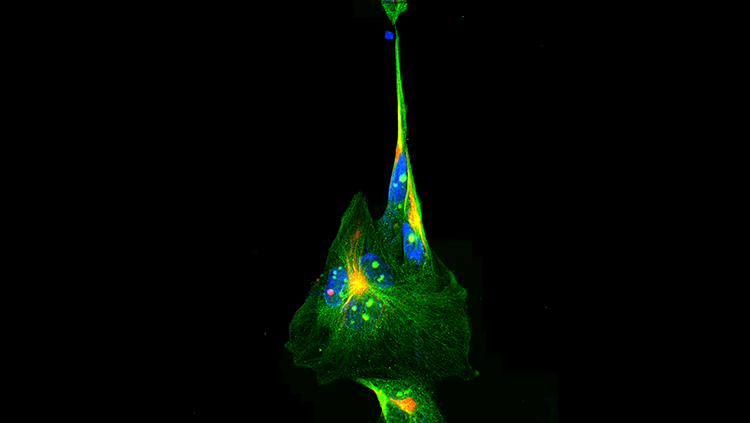

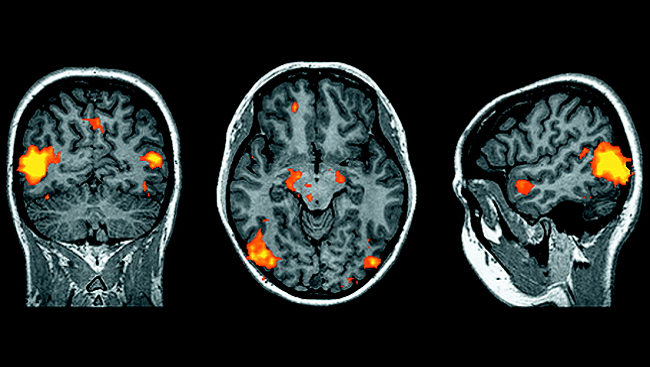

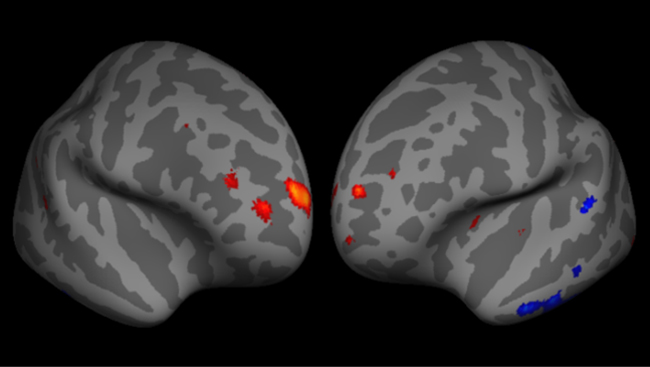

Magnetic resonance imaging (MRI) scans of the volunteers' brains further revealed that those who most accurately assessed their own performance had more grey matter (the tissue containing the cell bodies of our neurons) in a part of the brain located at the very front, called the anterior prefrontal cortex. In addition, a white-matter tract (a pathway enabling brain regions to communicate) connected to the prefrontal cortex showed greater integrity in individuals with better metacognitive accuracy.

The findings, published in 'Science' in September 2010, linked the complex high-level process of metacognition to a small part of the brain. The study was the first to show that physical brain differences between people are linked to their level of self-awareness or metacognition.

Intriguingly, the anterior prefrontal cortex is also one of the few parts of the brain with anatomical properties that are unique to humans and fundamentally different from those of our closest relatives, the great apes. This raises the possibility that introspection of this kind is unique to humans.

"At this stage, we don't know whether this area develops as we get better at reflecting on our thoughts, or whether people are better at introspection if their prefrontal cortex is more developed in the first place," says Steve.

I believe I do

Although research from this and other labs points to candidate brain regions or networks for metacognition in the prefrontal cortex, it doesn't explain why these regions are involved. Steve plans to use his fellowship to address that question by investigating the neural mechanisms that generate metacognitive reports.

He's approaching the question by attempting to separate out the different kinds of information (or variables) people use to monitor their mental and physical performance.

He cites playing a tennis shot as an example. "If I ask you whether you just played a good tennis shot, you can introspect both about whether you aimed correctly and about how well you carried out your shot. These two variables might go together to make up your overall confidence in the shot."

To evaluate how confident we are in each variable (aim and shot) we need to weigh up different sets of perceptual information. To assess our aim, we would consider the speed and direction of the ball and the position of our opponent across the net. To judge how well we carried out the actual shot, we would think about the position of our feet and hips, how we pivoted, and how we swung and followed through.

There may well be some discrepancy between the shot we wanted to achieve and the shot we actually made. This is a crucial distinction for scientists exploring decision making. "Psychologists tend to think of beliefs, 'what I should do', as being separate from actions," explains Steve.

"When you're choosing between two chocolate bars, you might decide on a Mars bar - that's what you believe you should have, what you want and value. But when you actually carry out the action of reaching for a bar, you might end up reaching for a Twix instead. There's sometimes a difference there between what you should do and what you actually end up doing, and that's perhaps a crucial distinction for metacognition. My initial experiments are going to try to tease apart these variables."

Research into decision making has identified specific brain regions where beliefs about one choice option (one chocolate bar, or one tennis shot) being preferable to another are encoded. However, says Steve, "what we don't know is how this type of information [about values and beliefs] relates to metacognition about your decision making. How does the brain give humans the ability to reflect on its computations?"

He aims to connect the finely detailed picture of decision making given to us by neuroscience to the very vague picture we have of self-reflection or metacognition.

New York, New York

Steve is working with researchers at New York University who are leaders in designing experimental tasks and building models of decision making, "trying to implement in a laboratory setting exactly the kind of question we might ask the tennis player".

They are designing a perceptual task in which people will have to choose a target to hit based on whether a patch of dots is moving to the left or right. In other words, people need to decide which target they should hit (based on their belief about the dots' direction of motion), and then they have to hit it accurately (action).

"We can use a variety of techniques to manipulate the difficulty of the task. If we make the target very small, people are obviously going to be more uncertain about whether they're going to be able to hit it. So we can separately manipulate the difficulty of deciding what you should do, and the difficulty of actually doing it."

Once the task is up and running, they will then ask the volunteers to make confidence judgements - or even bets - about various aspects of their performance: how likely they thought it was that they chose the right target, or hit it correctly. Comparing their answers with their actual performance will give an objective measure of the accuracy of their beliefs (metacognition) about their performance.

Drilling down

Such a task will mean Steve and his colleagues can start to decouple the perceptual information that tells people what they should do (which target to hit) from the perceptual information that lets them judge how difficult it will be to carry out the action (hitting the target).

And that in turn will make it possible to start uncoupling various aspects of metacognition - about beliefs, and about actions or responses - from one another. "I want to drill down into the basics, the variables that come together to make up metacognition, and ask the question: how fine-grained is introspection?"

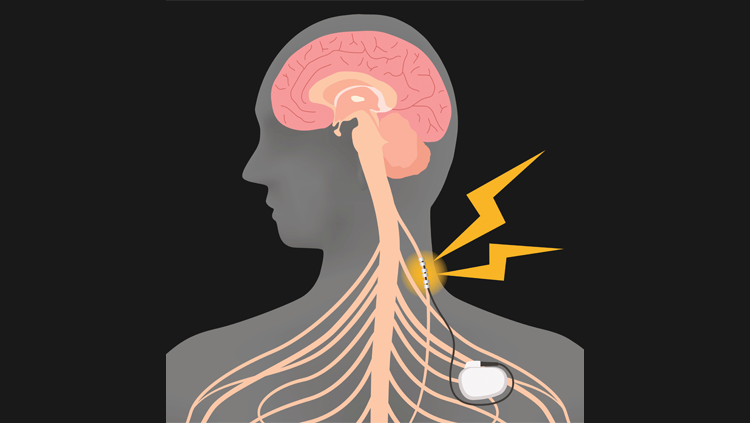

He'll then use a variety of neuroscience techniques, including brain scanning and intervention techniques such as transcranial magnetic stimulation (which can briefly disrupt activity in a targeted brain region), to understand how different brain regions encode information relevant for metacognition. "Armed with our new task, we can ask questions such as: is belief- and action-related information encoded separately in the brain? Is the prefrontal cortex integrating metacognitive information? How does this integration occur? Answers to these questions will allow us to start understanding how the system works."

Since metacognition is so fundamental to making successful decisions - and to knowing ourselves - it's clearly important to understand more about it. Steve's research may also have practical uses in the clinic. Metacognition is linked to the concept of 'insight', which in psychiatry refers to whether someone is aware of having a particular disorder. As many as 50 per cent of patients with schizophrenia have profoundly impaired insight and, unsurprisingly, poor insight is a good indicator of whether a patient will fail to take their medication.

"If we have a nice task to study metacognition in healthy individuals that can quantify the different components of awareness of beliefs, and awareness of responses and actions, we hope to translate that task into patient populations to understand the deficits of metacognition they might have." With that in mind, Steve plans to collaborate with researchers at the University of Oxford and the Institute of Psychiatry in London when he returns to finish his fellowship in the UK.

A science of metacognition also has implications for concepts of responsibility and self-control. Our society currently places great weight on self-awareness: think of a time when you excused your behaviour with 'I just wasn't thinking'. Understanding the boundaries of self-reflection, therefore, is central to how we ascribe blame and punishment, how we approach psychiatric disorders, and how we view human nature.

By Penny Bailey

CONTENT PROVIDED BY

Wellcome Trust