Modeling the Mind: Electric Neurons to Artificial Intelligence

- Published 5 Nov 2025

- Author Lauren Drake

- Source BrainFacts/SfN

How nerves carry signals was a mystery until Luigi Galvani connected the dots between electricity and animal movement. The 18th-century physician was one of the first to use physics to explain the nervous system. Working with frog legs, a scalpel, and electric charges, he found that sending a charge into a nerve makes the attached muscle twitch, demonstrating that electricity drives animal movement. Galvani’s simple electrical experiments paved the way for computational neuroscientists to delve into brain networks more than a century later.

Today, these researchers use engineering tools like computers and artificial intelligence to understand how electrical signals in the brain drive human experiences. But you don’t necessarily need to be a neuroscientist to study the brain — some of the greatest computational neuroscientists have been physicists and mathematicians. Computational neuroscience helps us understand the brain not only as an organ, but as a system.

Standard Models

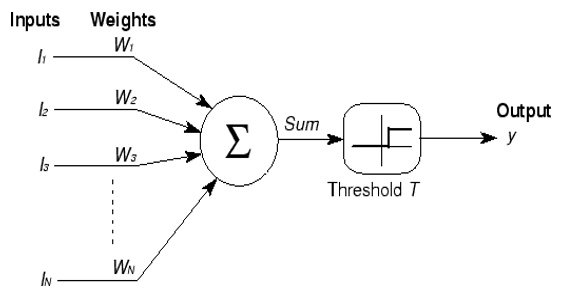

The brain is an electrical system, but the basic rules it follows to process information remained unclear until the 20th century. In 1943, neuroscientist Warren McCulloch and logician Walter Pitts developed the first mathematical model of how neurons process information.

They modeled a neuron’s inputs as positive (excitatory) or negative (inhibitory) signals, multiplied each one by a number called a “weight” reflecting that signal’s strength or significance, and added the results. The sum led to one of two outcomes: either the neuron fired, or it didn't. It all depended on whether the sum reached the cell’s “threshold,” the total input needed to cross the cell’s finish line and send a message.
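The weighted-sum-and-threshold rule described above fits in a few lines of Python. This is a minimal sketch rather than McCulloch and Pitts's original notation: the function name `mcculloch_pitts` and the example weights and threshold are hypothetical, and it assumes the common convention that the neuron fires when the sum is at or above the threshold.

```python
def mcculloch_pitts(inputs, weights, threshold):
    """Return 1 (fire) if the weighted sum of inputs reaches the threshold, else 0.

    Positive weights act as excitatory inputs, negative weights as inhibitory ones.
    """
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0


# Hypothetical neuron: two excitatory inputs and one strong inhibitory input.
weights = [1.0, 1.0, -2.0]
threshold = 2.0

print(mcculloch_pitts([1, 1, 0], weights, threshold))  # → 1 (both excitatory inputs active)
print(mcculloch_pitts([1, 1, 1], weights, threshold))  # → 0 (inhibitory input vetoes firing)
print(mcculloch_pitts([1, 0, 0], weights, threshold))  # → 0 (one input is not enough)
```

With these particular weights, the unit acts like a logical AND of its two excitatory inputs that the inhibitory input can veto, the kind of simple logic that networks of such units can combine into more complex computations.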

The model turned the mystery of the mind into a legible map of connections resembling a road network, with stop signs (inhibitory inputs), green lights (excitatory inputs), and merging lanes (inputs being summed). This simple model, often called the McCulloch-Pitts neuron, shed light on how neurons communicate. But scientists still lacked an explanation for how the cells "fire" those messages.

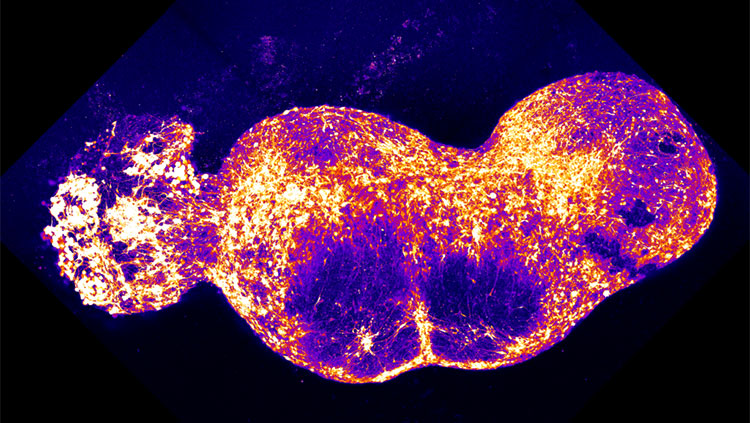

In 1952, physiologists and biophysicists Alan Hodgkin and Andrew Huxley used the giant axon of the squid to study how neurons fire. The axon is so large, up to about a millimeter in diameter, that they could place electrodes inside it and record its electrical signals directly.

By treating the axon’s membrane as a set of selective gates that open and close for sodium and potassium ions depending on voltage, and writing equations to describe those gates, they uncovered the mechanism that produces the action potential. What was once an unsolved, abstract biological phenomenon became a set of graphs tracking the movement of ions.

With the process reduced to a few variables (the membrane voltage plus three variables describing the gates), researchers could use the Hodgkin-Huxley model to calculate whether a neuron would fire. By describing the rush of ions that drives an action potential, Hodgkin and Huxley explained the basic building block of neural communication. Mapping this process would have been impossible without mathematical modeling.
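To show how a handful of variables can predict firing, here is a minimal sketch that steps the Hodgkin-Huxley equations forward in time with simple Euler integration. It assumes a commonly used modern restatement of the squid-axon parameters, shifted so the resting potential sits near -65 mV; the function names, the 0.01 ms step size, and the rule of counting a spike each time the voltage rises through 0 mV are illustrative choices, not part of the original work.

```python
import math


def _linexp(x):
    """x / (1 - exp(-x / 10)), using its limiting value 10 at x == 0 to avoid dividing by zero."""
    if abs(x) < 1e-9:
        return 10.0
    return x / (1.0 - math.exp(-x / 10.0))


# Voltage-dependent opening (alpha) and closing (beta) rates of the gates, in 1/ms; v in mV.
def alpha_m(v):
    return 0.1 * _linexp(v + 40.0)

def beta_m(v):
    return 4.0 * math.exp(-(v + 65.0) / 18.0)

def alpha_h(v):
    return 0.07 * math.exp(-(v + 65.0) / 20.0)

def beta_h(v):
    return 1.0 / (1.0 + math.exp(-(v + 35.0) / 10.0))

def alpha_n(v):
    return 0.01 * _linexp(v + 55.0)

def beta_n(v):
    return 0.125 * math.exp(-(v + 65.0) / 80.0)


def simulate_hh(i_ext, t_max=100.0, dt=0.01):
    """Simulate a patch of membrane with a constant injected current i_ext (uA/cm^2).

    Returns the membrane voltage trace in mV, sampled every dt ms.
    """
    c_m = 1.0                                  # membrane capacitance, uF/cm^2
    g_na, g_k, g_l = 120.0, 36.0, 0.3          # maximal conductances, mS/cm^2
    e_na, e_k, e_l = 50.0, -77.0, -54.387      # reversal potentials, mV

    # Start at rest, with each gate at its steady-state value for that voltage.
    v = -65.0
    m = alpha_m(v) / (alpha_m(v) + beta_m(v))
    h = alpha_h(v) / (alpha_h(v) + beta_h(v))
    n = alpha_n(v) / (alpha_n(v) + beta_n(v))

    trace = [v]
    for _ in range(int(round(t_max / dt))):
        # Ionic currents through sodium, potassium, and leak channels.
        i_na = g_na * m**3 * h * (v - e_na)
        i_k = g_k * n**4 * (v - e_k)
        i_l = g_l * (v - e_l)
        # Rates of change of the voltage and the three gating variables.
        dv = (i_ext - i_na - i_k - i_l) / c_m
        dm = alpha_m(v) * (1.0 - m) - beta_m(v) * m
        dh = alpha_h(v) * (1.0 - h) - beta_h(v) * h
        dn = alpha_n(v) * (1.0 - n) - beta_n(v) * n
        # Forward-Euler step.
        v += dt * dv
        m += dt * dm
        h += dt * dh
        n += dt * dn
        trace.append(v)
    return trace


def count_spikes(trace, threshold=0.0):
    """Count upward crossings of the threshold voltage (mV)."""
    return sum(1 for a, b in zip(trace, trace[1:]) if a < threshold <= b)


print("spikes with no input:", count_spikes(simulate_hh(0.0)))
print("spikes with 10 uA/cm^2 input:", count_spikes(simulate_hh(10.0)))
```

Without input the simulated membrane stays near rest, while a steady injected current drives a train of action potentials. Smaller step sizes or a more accurate integrator would be the usual next refinement.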

These two mathematical models described basic functions of the brain. In the 21st century, modeling is still a key tool for computational neuroscientists, who use data to build models of phenomena ranging from single-neuron function to whole-brain disease dynamics.

Analyzing and Deciphering Data

Computational neuroscientists use a range of mathematical tools and computational strategies to process and analyze the overwhelming amounts of data they gather from the brain.

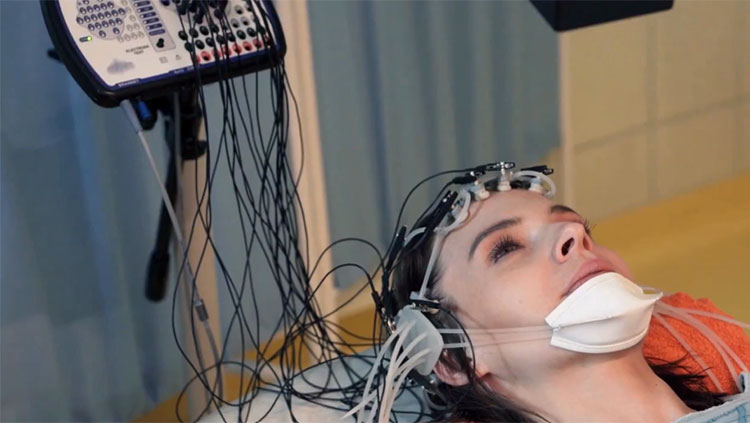

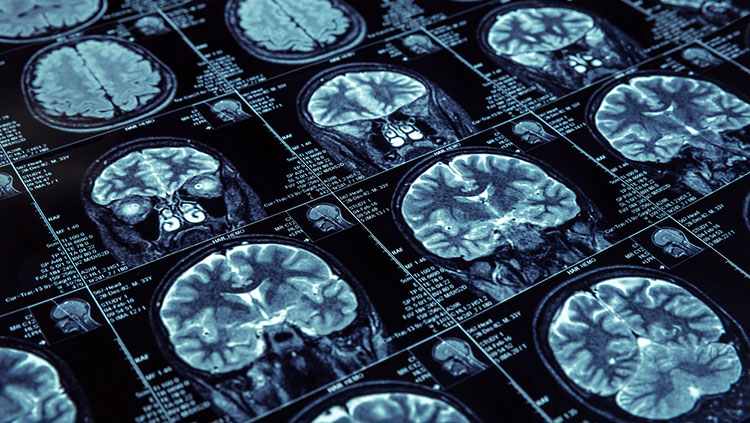

Researchers who record brain activity with electroencephalography (EEG) or magnetic resonance imaging (MRI), for example, rely on computational methods to decipher the signals they collect. Those in the field of connectomics, meanwhile, use a range of tools to map how the brain is wired together, both structurally and functionally.

The human brain contains roughly 86 billion neurons and trillions of connections between them. Tracking each neuron as a separate variable is virtually impossible, and data with this many dimensions is difficult to interpret or plot. So researchers use an approach called dimensionality reduction to condense thousands of variables into a few that are easier to understand.

Take learning a subway route as an analogy: you don’t need to see every train car, rider, and operator to figure out how to reach your destination. A single line on a simple map gets the point across. Similarly, condensing the activity of millions of neurons into a few patterns makes complex brain networks easier to understand.
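Principal component analysis (PCA) is one widely used dimensionality-reduction method; the article does not name a specific technique, so treat it as one illustrative choice. This sketch generates hypothetical activity for 100 simulated neurons that are all driven by just two hidden signals plus noise, then uses NumPy's singular value decomposition to measure how much of the total variance each principal component captures. The function names and simulation settings are assumptions made for the example.

```python
import numpy as np


def pca_explained_variance(activity):
    """Return the fraction of total variance captured by each principal component.

    activity has shape (time_points, neurons).
    """
    centered = activity - activity.mean(axis=0)
    # Squared singular values of the centered data are proportional to each component's variance.
    singular_values = np.linalg.svd(centered, compute_uv=False)
    variance = singular_values**2
    return variance / variance.sum()


def simulate_population(n_neurons=100, n_time=1000, noise=0.1, seed=0):
    """Hypothetical data: neurons driven by two shared latent signals plus independent noise."""
    rng = np.random.default_rng(seed)
    t = np.linspace(0, 10, n_time)
    latents = np.stack([np.sin(t), np.cos(2 * t)], axis=1)  # shape (time, 2)
    mixing = rng.normal(size=(2, n_neurons))                 # each neuron's weights on the latents
    return latents @ mixing + noise * rng.normal(size=(n_time, n_neurons))


ratios = pca_explained_variance(simulate_population())
print(f"Top 2 of {len(ratios)} components explain {ratios[:2].sum():.1%} of the variance")
```

Real recordings are noisier and rarely this cleanly low-dimensional; the point is only that a few components can summarize many correlated neurons, much as one line on a map summarizes many trains.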

Researchers also use neural decoding tools to translate the brain’s electrical signals into recognizable messages, turning raw activity that is hard to interpret on its own into patterns that reveal what the brain is doing.

For example, electrodes placed on a person’s scalp record the brain’s electrical activity, producing pages of spikes and oscillations. Like Morse code, these signals can be decoded with computational models that translate them into something understandable. Regression models, which predict continuous values, and classifiers, which sort data into categories, can estimate what the activity is communicating.
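A linear regression decoder is one of the simplest versions of this idea. The sketch below is hypothetical throughout: it simulates 50 recording channels whose activity varies linearly with a hidden stimulus value, fits a least-squares decoder on training trials with NumPy, and checks how well the decoder predicts the stimulus on trials it has not seen. Real decoders work with far messier signals and often use more sophisticated models.

```python
import numpy as np


def simulate_recordings(n_trials=500, n_channels=50, noise=0.5, seed=1):
    """Hypothetical data: each channel's activity = baseline + tuning * stimulus + noise."""
    rng = np.random.default_rng(seed)
    stimulus = rng.uniform(-1, 1, size=n_trials)
    baseline = rng.normal(size=n_channels)
    tuning = rng.normal(size=n_channels)
    activity = (baseline + np.outer(stimulus, tuning)
                + noise * rng.normal(size=(n_trials, n_channels)))
    return activity, stimulus


def _with_intercept(activity):
    """Append a column of ones so the decoder can learn an offset."""
    return np.column_stack([activity, np.ones(len(activity))])


def fit_linear_decoder(activity, stimulus):
    """Least-squares weights mapping channel activity to the stimulus value."""
    weights, *_ = np.linalg.lstsq(_with_intercept(activity), stimulus, rcond=None)
    return weights


def decode(activity, weights):
    """Predict the stimulus for each trial from its channel activity."""
    return _with_intercept(activity) @ weights


activity, stimulus = simulate_recordings()
train_idx, test_idx = slice(0, 400), slice(400, None)   # hold out the last 100 trials
weights = fit_linear_decoder(activity[train_idx], stimulus[train_idx])
predicted = decode(activity[test_idx], weights)
r = np.corrcoef(predicted, stimulus[test_idx])[0, 1]
print(f"Correlation between decoded and true stimulus on held-out trials: {r:.2f}")
```

Evaluating on held-out trials matters: a decoder judged only on the data it was fit to can look accurate simply by memorizing noise.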

As researchers gather vast amounts of neural data and use models to decipher them, larger patterns of activity can reveal new insights into how we think.

Looking to the Future of AI

Artificial intelligence (AI) refers to computer systems that complete tasks that usually require human thought and action. AI and computational neuroscience have a two-way relationship. Brain-inspired models, like deep neural networks, shape AI systems. In turn, as AI improves, its methods help researchers analyze large volumes of neural data and build predictive models.

With enough reliable data, trained AI models can help researchers study the brain and identify patterns they might not have spotted on their own. Machine learning models sort through this information and improve their performance during training by adjusting their internal parameters to reduce their errors.
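In practice, "training" usually means repeatedly nudging a model's internal parameters in the direction that reduces its errors on example data. As a minimal sketch, assuming plain gradient descent on a logistic-regression classifier and hypothetical two-feature data (models used in neuroscience are typically far larger), the loop below records the loss at every step so you can watch it shrink.

```python
import numpy as np


def train_logistic_regression(x, y, lr=0.5, epochs=200):
    """Fit weights by gradient descent; return weights, bias, and the loss at each epoch."""
    w = np.zeros(x.shape[1])
    b = 0.0
    eps = 1e-12  # keeps log() away from zero
    losses = []
    for _ in range(epochs):
        p = 1.0 / (1.0 + np.exp(-(x @ w + b)))  # predicted probability of class 1
        loss = -np.mean(y * np.log(p + eps) + (1 - y) * np.log(1 - p + eps))
        losses.append(loss)
        # Gradient of the average cross-entropy loss with respect to w and b.
        grad_w = x.T @ (p - y) / len(y)
        grad_b = np.mean(p - y)
        # Step downhill: this is the "improving itself" part of training.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b, losses


rng = np.random.default_rng(2)
# Hypothetical data: two overlapping clusters standing in for two kinds of recordings.
x = np.vstack([rng.normal(-1, 1, size=(100, 2)), rng.normal(1, 1, size=(100, 2))])
y = np.concatenate([np.zeros(100), np.ones(100)])

w, b, losses = train_logistic_regression(x, y)
accuracy = np.mean(((x @ w + b) > 0) == y)
print(f"loss: {losses[0]:.3f} -> {losses[-1]:.3f}, training accuracy: {accuracy:.0%}")
```

The starting loss corresponds to pure guessing; each update lowers it a little, which is the mechanical meaning behind a model "learning" from data.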

Convolutional neural networks (CNNs), for example, are loosely inspired by how the brain processes vision. They scan an image with small filters that detect simple features such as edges, then combine those features across successive layers to recognize objects. Because they learn associations between image features and labels, these networks can tackle many problems, from detecting disease in an X-ray to spotting road damage.
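The core operation of a CNN, sliding a small filter across an image, can be sketched with plain NumPy. In this minimal illustration, a hand-picked 3×3 vertical-edge filter scans a tiny hypothetical image, followed by the ReLU and max-pooling steps CNNs commonly use. In a trained CNN the filter values are learned from data rather than chosen by hand, and, as in most deep-learning libraries, this "convolution" is technically cross-correlation because the filter is not flipped.

```python
import numpy as np


def conv2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1) and sum the elementwise products."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


def relu(x):
    """Keep positive responses and set negative ones to zero."""
    return np.maximum(x, 0)


def max_pool(x, size=2):
    """Keep the largest value in each non-overlapping size x size block."""
    h, w = x.shape[0] // size, x.shape[1] // size
    return x[:h * size, :w * size].reshape(h, size, w, size).max(axis=(1, 3))


# Hypothetical 6x6 image: dark on the left half, bright on the right half.
image = np.zeros((6, 6))
image[:, 3:] = 1.0

# Vertical-edge filter: responds where brightness increases from left to right.
kernel = np.array([[-1.0, 0.0, 1.0]] * 3)

features = relu(conv2d(image, kernel))
print(features)            # every row is [0, 3, 3, 0]: responses only where the edge is
print(max_pool(features))  # [[3, 3], [3, 3]]: a smaller map that keeps the strongest responses
```

Stacking many such filter, ReLU, and pooling stages lets later layers respond to combinations of edges, such as corners, textures, and eventually whole objects.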

Large language models (LLMs), another powerful set of tools, are trained on large datasets of existing text to predict the next word in a sentence. These systems power advanced chatbots, but they can be useful for neuroscience researchers, too.
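LLMs rely on large neural networks trained on enormous text collections, which cannot be reproduced in a few lines. As a deliberately simplified stand-in for the same next-word prediction task, this sketch counts which word follows which in a tiny hypothetical corpus (a bigram model, a much older and simpler technique) and predicts the most frequent follower. The function names and corpus are invented for illustration.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for each word, how often each other word follows it."""
    words = text.lower().split()
    followers = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1
    return followers


def predict_next(model, word):
    """Return the most frequent follower of `word`, or None if the word was never seen."""
    counts = model.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


corpus = (
    "the brain is an electric system "
    "the brain processes information "
    "the neuron fires when input crosses the threshold"
)
model = train_bigram_model(corpus)
print(predict_next(model, "the"))   # → brain
print(predict_next(model, "axon"))  # → None
```

An LLM does the same job far more flexibly: instead of looking only at the previous word, it weighs the whole preceding context and produces a probability for every possible next token.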

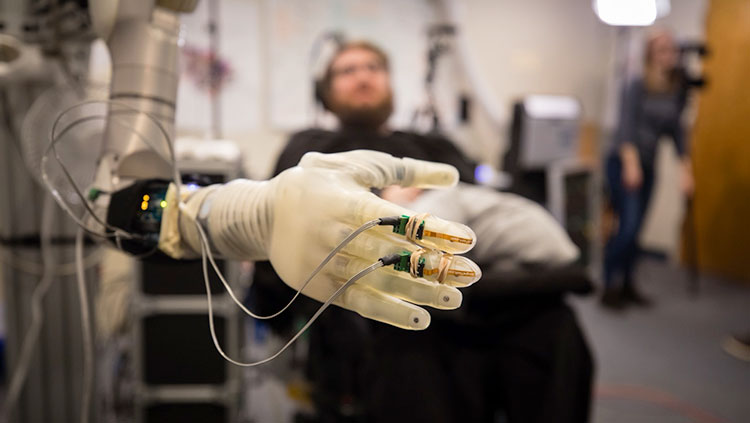

Some groups have paired decoding tools with LLMs to interpret recordings of brain activity. The approach can translate brain activity into text or speech in a matter of seconds, paving the way for new brain-computer interfaces (BCIs) designed to give people living with paralysis more ways to communicate. AI is also accelerating BCI development by helping decode the brain signals that control these interfaces, which include devices designed to improve mobility, such as robotic arms.
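The cited studies use their own, far more elaborate systems. The sketch below only illustrates the general idea of pairing a decoder with a language model, under invented assumptions: a hypothetical neural decoder assigns probabilities to candidate words, a hypothetical language model assigns probabilities to which word plausibly comes next, and the two are combined by adding log-probabilities. All names and numbers are made up for the example.

```python
import math


def combine_scores(decoder_probs, lm_probs, lm_weight=1.0):
    """Rank candidate words by decoder log-probability plus weighted language-model log-probability."""
    scores = {}
    for word, p_decoder in decoder_probs.items():
        p_lm = lm_probs.get(word, 1e-6)  # small floor for words the language model never proposed
        scores[word] = math.log(p_decoder) + lm_weight * math.log(p_lm)
    return max(scores, key=scores.get), scores


# Hypothetical decoder output for the word after "I want some":
# the brain signals for "water" and "waiter" look almost identical.
decoder_probs = {"water": 0.45, "waiter": 0.47, "winter": 0.08}
# Hypothetical language-model probabilities for the word after "I want some".
lm_probs = {"water": 0.30, "waiter": 0.001, "winter": 0.002}

best, scores = combine_scores(decoder_probs, lm_probs)
print(best)  # → water
```

On its own, the noisy decoder would slightly favor "waiter"; the language model's knowledge of likely word sequences tips the choice to "water". The `lm_weight` parameter controls how much the system trusts the language model relative to the brain signals.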

AI is not only inspired by the brain but is also becoming a vital tool to study it. Together, researchers and AI can demystify patterns in the human brain to advance medicine and technology.

CONTENT PROVIDED BY

BrainFacts/SfN

References

Awuah, W. A., Ahluwalia, A., Darko, K., Sanker, V., Tan, J. K., Tenkorang, P. O., Ben-Jaafar, A., Ranganathan, S., Aderinto, N., Mehta, A., Shah, M. H., Lee Boon Chun, K., Abdul-Rahman, T., & Atallah, O. (2024). Bridging minds and machines: The recent advances of brain–computer interfaces in neurological and neurosurgical applications. World Neurosurgery, 189, 138–153. https://doi.org/10.1016/j.wneu.2024.06.063

Bouhsissin, S., Assemlali, H., & Sael, N. (2025). Enhancing road safety: A convolutional neural network based approach for road damage detection. Machine Learning with Applications, 20, 100668. https://doi.org/10.1016/j.mlwa.2025.100668

Gerstner, W., Kistler, W. M., Naud, R., & Paninski, L. (2014). The Hodgkin–Huxley model (Chapter 2.2). In Neuronal dynamics: From single neurons to networks and models of cognition. Cambridge University Press. Retrieved September 10, 2025, from https://neuronaldynamics.epfl.ch/online/Ch2.S2.html

Herculano-Houzel, S. (2009). The human brain in numbers: A linearly scaled-up primate brain. Frontiers in Human Neuroscience, 3(31), 1–11. https://doi.org/10.3389/neuro.09.031.2009

Human Brain Project. (n.d.). Human Brain Project. Retrieved September 10, 2025, from https://www.humanbrainproject.eu/en/

Lindsay, G. (2021). Models of the mind: How physics, engineering and mathematics have shaped our understanding of the brain. Bloomsbury Sigma.

Lindsay, G. W. (2021). Convolutional neural networks as a model of the visual system: Past, present, and future. Journal of Cognitive Neuroscience, 33(10), 2017–2031. https://doi.org/10.1162/jocn_a_01544

Littlejohn, K. T., Cho, C. J., Liu, J. R., Silva, A. B., Yu, B., Anderson, V. R., Kurtz-Miott, C. M., Brosler, S., Kashyap, A. P., Hallinan, I. P., Shah, A., Tu-Chan, A., Ganguly, K., Moses, D. A., Chang, E. F., & Anumanchipalli, G. K. (2025). A streaming brain-to-voice neuroprosthesis to restore naturalistic communication. Nature Neuroscience, 28, 902–912. https://doi.org/10.1038/s41593-025-01905-6

Narin, A., Kaya, C., & Pamuk, Z. (2021). Automatic detection of coronavirus disease (COVID-19) using X-ray images and deep convolutional neural networks. Pattern Analysis and Applications, 24, 1207–1220. https://doi.org/10.1007/s10044-021-00984-y

Ye, Z., Ai, Q., Liu, Y., de Rijke, M., Zhang, M., Lioma, C., & Ruotsalo, T. (2025). Generative language reconstruction from brain recordings. Communications Biology, 8, 346. https://doi.org/10.1038/s42003-025-07443-3
